Part One was published last month.

In last month’s article, we used a real manufacturing facility to benchmark utility costs. We started looking at several filters to understand its performance better. Initially we filtered by:

- Size of facility (Large: >600,000 GSF)

- Type of facility (Manufacturing)

- Climate type (Hot and Humid)

As a rule, use as few filters as possible: the more filters you apply at once, the larger the data sample must be to leave enough buildings in your filter set. It is fine to test many filters, as we did last month, but keep the number applied simultaneously to a minimum so that enough buildings remain to analyze properly. Thus, finding the correct filter set is critical. See last month’s article for more information on defining the correct filter set.
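To make the trade-off concrete, here is a minimal sketch of how stacking filters shrinks a peer group. The building records, the `MIN_PEER_GROUP` threshold and all function names are made up for illustration; they are not taken from the benchmarking database used in this article.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Building:
    name: str
    gsf: int             # gross square feet
    facility_type: str
    climate: str


# Assumed minimum peer-group size; pick whatever your tool recommends.
MIN_PEER_GROUP = 10

# The three filters from the article, written as simple predicates.
FILTERS = {
    "large (>600,000 GSF)": lambda b: b.gsf > 600_000,
    "manufacturing": lambda b: b.facility_type == "manufacturing",
    "hot and humid": lambda b: b.climate == "hot and humid",
}

# Hypothetical sample, far smaller than a real database.
SAMPLE = [
    Building("A", 750_000, "manufacturing", "hot and humid"),
    Building("B", 650_000, "manufacturing", "cold"),
    Building("C", 200_000, "office", "hot and humid"),
    Building("D", 900_000, "office", "hot and humid"),
    Building("E", 620_000, "manufacturing", "hot and humid"),
    Building("F", 100_000, "manufacturing", "hot and humid"),
]


def apply_filters(buildings, filters):
    """Keep only the buildings that pass every filter in `filters`."""
    return [b for b in buildings if all(f(b) for f in filters.values())]


def peer_group_sizes(buildings, filters):
    """Peer-group size for each filter on its own and for all combined."""
    sizes = {
        name: len(apply_filters(buildings, {name: f}))
        for name, f in filters.items()
    }
    sizes["all combined"] = len(apply_filters(buildings, filters))
    return sizes


for label, size in peer_group_sizes(SAMPLE, FILTERS).items():
    note = "" if size >= MIN_PEER_GROUP else " (too few buildings)"
    print(f"{label}: {size}{note}")
```

Each filter on its own leaves more buildings than all three applied together, which is why testing filters one at a time is safer than applying them all at once.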

In our analysis last month, we looked at how our performance changed with each of these filters and concluded that the most significant filter impacting our performance was the climate type. In reality, what we are doing with these various filter options is changing our peer group for comparison purposes—by modifying the filter set, we are modifying the peer group. If you haven’t compared your facility with the right peer group, the charts will not provide a realistic picture of your benchmarked costs. Comparing data or even processes isn’t a very effective way to improve your performance unless the comparisons are made with a relevant peer group.

As a result of our analysis, let’s say you have looked at the results from enough filters and you are comfortable that you are comparing your building with the right peer group. So are we done with our analysis? On the one hand, yes, we’ve created a valid scorecard and can see where our facility stands in comparison to other similar facilities. But is there more we can do? Is there a way to improve our facility’s performance? Yes on both counts!

Getting Results: Integrating Best Practices into the Benchmarking Process

To improve our facility’s performance, we must look at the best practices being employed in facilities that are outperforming ours. We can then compare them to the best practices we have implemented in our facility. Benchmarking can be used for that as well, except we will be benchmarking best practices instead of costs. To illustrate, let’s continue with the same manufacturing facility in Florida and see which best practices it has implemented and how they compare with those of its peer group.

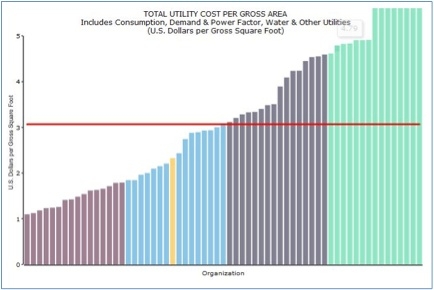

In Figure 1, each building is shown by a different line; ours is shown by the yellow line, and we see that the cost for our utilities is $2.33 per gross square foot (GSF). The only filter applied screens out all except hot and humid buildings (the climate zone labeled “hot and humid /dry”). In the diagram, we have shown the 1st Quartile (16 left-most buildings), Median (horizontal red line) and 3rd Quartile points. Our building’s utility costs (yellow line) are in the 2nd Quartile, midway between the 1st Quartile point and the median.

Figure 1 — Utility Costs per GSF filtered by hot-and-dry climate type. Provided courtesy of FM BENCHMARKING
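For readers who want to reproduce this kind of placement on their own data, the sketch below shows one way to find which quartile a cost falls in. Only the $2.33 figure comes from the article; the other peer costs are invented, and `quartile_of` is a hypothetical helper. As in the chart, the 1st Quartile is the lowest-cost (best-performing) group.

```python
import statistics

# Hypothetical peer-group utility costs in $/GSF; only 2.33 is from the article.
PEER_COSTS = [1.50, 1.80, 2.10, 2.33, 2.60, 2.90, 3.20, 3.50]


def quartile_of(value, sample):
    """Return 1-4, where 1 is the lowest-cost quartile of `sample`."""
    # statistics.quantiles with n=4 returns the three cut points:
    # the 1st Quartile point, the median and the 3rd Quartile point.
    q1, median, q3 = statistics.quantiles(sample, n=4, method="inclusive")
    if value <= q1:
        return 1
    if value <= median:
        return 2
    if value <= q3:
        return 3
    return 4


print("Our building is in quartile", quartile_of(2.33, PEER_COSTS))
```

Different tools interpolate quartile points slightly differently, so a building close to a boundary may land on either side depending on the method used.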

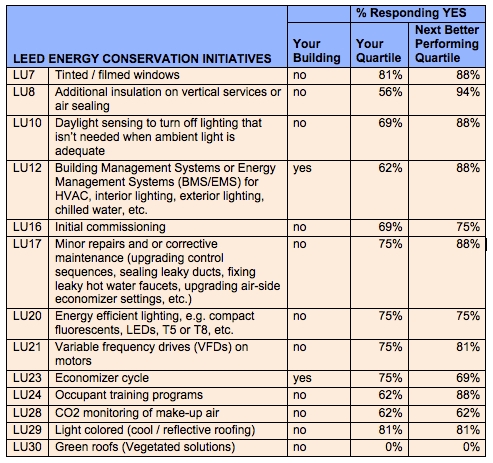

Now, we’ll look at some of the best practices that have been implemented at our facility, by our peers in our 2nd Quartile, and by those in the next better-performing Quartile (1st). By looking at which best practices have been implemented by those in better-performing buildings and in our filter set, we may see some options that we could implement at our facility to improve its performance.

Listed below in Figure 2 are just a few of the best practices from the database. At our manufacturing facility we have neither tinted nor filmed the windows (Line LU7), while 81% of our peer group’s participants in our Quartile have done so, and 88% of the 1st Quartile participants have implemented this item. So this looks like a very good best practice for us to implement.

But there may be an even better opportunity. Note item LU8 (Additional insulation on vertical surfaces or air sealing). We have not implemented this item at our facility either, and only 56% of the peer group in our quartile has done so. But when we look at the 1st quartile (Next Better Performing Quartile) we see that 94% of the participants in our peer group have done so.

Based on the above analyses, we see that certain Best Practices are likely to be done by most in the first and second Quartiles, while other best practices may be those that tend to move buildings from the second to the first performance Quartile. We should seriously consider implementing both types of best practices.
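The two types of best practices can be separated with a simple comparison of adoption rates. The sketch below uses the LU7 and LU8 percentages quoted above; the `COMMON_PCT` and `DIFFERENTIATOR_GAP` thresholds and the `classify` helper are assumptions chosen for illustration, not rules from the benchmarking tool.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Practice:
    code: str
    description: str
    own_quartile_pct: int    # adoption in our Quartile (2nd)
    next_quartile_pct: int   # adoption in the next better Quartile (1st)


# Assumed thresholds, in percentage points; tune them to your own data.
COMMON_PCT = 75
DIFFERENTIATOR_GAP = 20

# Adoption figures quoted in the article for two items we have not implemented.
NOT_YET_IMPLEMENTED = [
    Practice("LU7", "Tinted or filmed windows", 81, 88),
    Practice("LU8", "Additional insulation or air sealing", 56, 94),
]


def classify(practice):
    """Label a practice by how its adoption differs between quartiles."""
    gap = practice.next_quartile_pct - practice.own_quartile_pct
    if gap >= DIFFERENTIATOR_GAP:
        return "differentiator"   # much more common in the better quartile
    if practice.own_quartile_pct >= COMMON_PCT:
        return "common"           # most peers in our quartile already do it
    return "uncommon"


for p in NOT_YET_IMPLEMENTED:
    gap = p.next_quartile_pct - p.own_quartile_pct
    print(f"{p.code} {p.description}: {classify(p)} (gap {gap} points)")
```

With these thresholds, LU7 comes out as a common practice and LU8 as a differentiator, which mirrors the reading given above.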

Figure 2 — Utility Best Practices filtered by hot-and-dry climate type. Provided courtesy of FM BENCHMARKING

Looking a bit further down the list, note that 81% of the participants in both our Quartile and the next better performing Quartile have implemented light-colored reflective roofs (LU29). This matches the 81% of our Quartile that has tinted or filmed windows, and it should certainly be considered. Note that green roofs (LU30) haven’t really taken hold in this sample; be careful if you are considering implementation, as you may be the only one in your climate zone.

What is really happening is that the FM is able to develop a carefully targeted peer group and then see what has been done to achieve results. This is benchmarking with integrated best practices: it shows what options you can implement to improve your building’s performance.

Looking at facilities without good peer group comparisons can be a major waste of time. Last month, we saw that you need to apply filtering tools iteratively to define the appropriate peer group for your specific situation; this month, we saw that an accurate scorecard, while great for knowing where you stand compared to others, doesn’t by itself help your building perform better. That’s where the best practices come in.

Is there still more we can do?

In the above example, while we defined our filters and analyzed our data based on costs, we could have done it by consumption, which is an equally (if not more) valid measure. By comparing the above results for costs and best practices to those for consumption and best practices, we will have the clearest idea of which best practices to implement, as we will have removed any biases in our analysis due to differences in regional utility rates.
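A small sketch shows why the two views can disagree. It assumes, for simplicity, that utility cost per GSF is just electricity consumption multiplied by a local rate; the two sites, their figures and the `rank_by` helper are all hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Site:
    name: str
    kwh_per_gsf: float     # annual consumption per gross square foot
    rate_per_kwh: float    # local utility rate in $/kWh

    @property
    def cost_per_gsf(self):
        # Simplifying assumption: cost = consumption x rate.
        return self.kwh_per_gsf * self.rate_per_kwh


# Made-up sites: X uses more energy but pays a lower regional rate.
SITES = [
    Site("X", kwh_per_gsf=20.0, rate_per_kwh=0.10),
    Site("Y", kwh_per_gsf=16.0, rate_per_kwh=0.15),
]


def rank_by(sites, metric):
    """Return site names ordered from best (lowest) to worst on `metric`."""
    return [s.name for s in sorted(sites, key=lambda s: getattr(s, metric))]


print("By cost:       ", rank_by(SITES, "cost_per_gsf"))
print("By consumption:", rank_by(SITES, "kwh_per_gsf"))
```

Site X looks better on cost only because its rate is lower; on consumption, Site Y is the better performer. Running both analyses guards against that kind of rate-driven bias.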

These types of iterative approaches are the only way one can use benchmarking to improve a building’s overall performance and reduce costs without cutting service. This brings us to a very critical point: successful benchmarking requires much more than looking at a scorecard. To know what to do to improve building performance, one needs to interpret results continuously and then see whether further analyses may be appropriate. In other words, successful benchmarking is not just a science; it is an art as well.